Organizations want agile, secure, and interoperable ways to connect large language models (LLMs) with their data, systems, and workflows. The Model Context Protocol (MCP) and Google’s Agent2Agent (A2A) protocol each address part of that problem: MCP standardizes how a model receives context and tools, and A2A standardizes how agents and services talk to each other. This post explores how you can implement MCP alongside Google A2A using FastAPI and Python to get secure, modular, and scalable interactions between AI agents and enterprise tools. With AI autonomy and micro-agent architectures gaining momentum, understanding these protocols is becoming a strategic choice rather than an optional one. Whether you’re working on a chatbot, automation assistant, or decision support system, this post will guide you from concept to execution.

Deep Dive into the Topic

What is MCP?

The Model Context Protocol (MCP) is an open standard, introduced by Anthropic, for connecting LLM applications to external tools and data sources. It defines a context-driven framework in which different systems (agents, tools, or data services) communicate with language models via structured “contexts.” In practice, MCP is a way to standardize and control how AI models interpret and act upon enterprise data.

MCP shifts the traditional prompt-engineering paradigm toward context engineering, enabling better control, explainability, and reproducibility.
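
To make "structured context" concrete: MCP messages are carried as JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool name and its arguments. The sketch below only builds such a message as a string; the tool name `lookup_customer` and its `customer_id` argument are hypothetical, and a real deployment would normally use an MCP SDK rather than hand-built JSON.

```python
import json
from typing import Any


def make_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and argument names, for illustration only.
request = make_tool_call(1, "lookup_customer", {"customer_id": "C-1042"})
print(request)
```

Because the request is plain structured data, it can be logged, validated, and replayed, which is where the reproducibility benefit comes from.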

What is Google A2A?

Google’s Agent2Agent (A2A) protocol is an open standard that lets independent agents and applications call each other in a controlled way. For security it builds on established web mechanisms; in the setup described here, that means OAuth 2.0-based token exchange, audience validation, and per-request scoping to keep interactions secure and auditable.

When used with MCP, Google A2A facilitates authenticated, secure transport of contextual data between applications and agents, making it ideal for enterprise AI workflows.
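
Audience validation and per-request scoping can be sketched as a check on token claims that have already been decoded and signature-verified. The claim names `aud` and `exp` follow the JWT standard and `scope` follows the usual OAuth 2.0 space-delimited convention; the function name, the exception type, and the example values are assumptions made for illustration, and signature verification is deliberately out of scope here.

```python
import time
from typing import Any, Optional


class TokenRejected(Exception):
    """Raised when a token's claims fail one of the checks below."""


def check_claims(
    claims: dict[str, Any],
    expected_audience: str,
    required_scope: str,
    now: Optional[float] = None,
) -> None:
    """Validate audience, expiry, and scope on already-verified claims."""
    now = time.time() if now is None else now

    # `aud` may be a single string or a list of strings.
    aud = claims.get("aud", [])
    audiences = [aud] if isinstance(aud, str) else list(aud)
    if expected_audience not in audiences:
        raise TokenRejected("audience mismatch")

    # `exp` is seconds since the epoch; a missing `exp` is treated as expired.
    if claims.get("exp", 0) <= now:
        raise TokenRejected("token expired")

    # `scope` is a space-delimited list of granted scopes.
    if required_scope not in str(claims.get("scope", "")).split():
        raise TokenRejected("missing scope")
```

A caller would run this once per request, passing the scope that the specific operation needs, so that a token issued for reading tickets cannot be used to write them.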

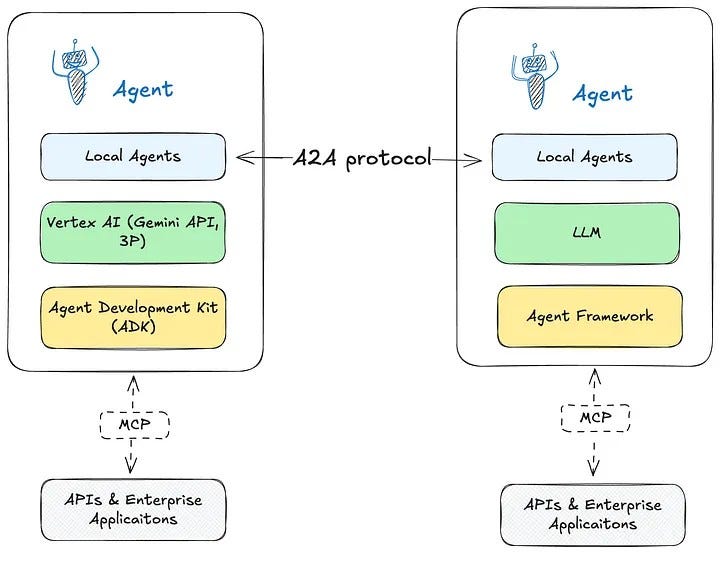

How They Work Together

Here’s a simplified architecture:

- MCP Agent (running on FastAPI) receives context.

- Google A2A ensures secure communication between your MCP agent and other internal services (e.g., data warehouse, CRM, LLM endpoint).

- Context Engine (could use LangChain or custom logic) structures and routes requests.

- The LLM receives enriched, pre-validated context via MCP for precise responses.
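
The context engine step in the list above can be sketched as a small dispatcher: each named source maps to a fetcher, the results are attached to the context, and the enriched context is rendered for the LLM. Everything here is hypothetical (the `Context` class, the source names, and the stubbed fetchers that return fixed strings); in a real system the fetchers would call the CRM or data warehouse over authenticated A2A calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Context:
    user_query: str
    sources: list[str]
    facts: dict[str, str] = field(default_factory=dict)


# Stub fetchers standing in for real internal services.
def fetch_crm(query: str) -> str:
    return "no open tickets for this customer"


def fetch_warehouse(query: str) -> str:
    return "3 units in stock"


FETCHERS: dict[str, Callable[[str], str]] = {
    "crm": fetch_crm,
    "warehouse": fetch_warehouse,
}


def enrich(ctx: Context) -> Context:
    """Route the query to each requested source and collect the results."""
    for source in ctx.sources:
        fetcher = FETCHERS.get(source)
        if fetcher is None:
            raise ValueError(f"Unknown source: {source}")
        ctx.facts[source] = fetcher(ctx.user_query)
    return ctx


def render_prompt(ctx: Context) -> str:
    """Turn the enriched context into the text handed to the LLM."""
    lines = [f"[{name}] {fact}" for name, fact in sorted(ctx.facts.items())]
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {ctx.user_query}"


ctx = enrich(Context("Can we ship order 77 today?", ["warehouse", "crm"]))
print(render_prompt(ctx))
```

Rejecting unknown sources up front is one way to keep the context pre-validated before it ever reaches the model.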

Tools You Might Use

- FastAPI (a lightweight Python web framework for async APIs)

- LangChain (contextual chain building for LLMs)

- PySyft (for privacy-preserving model access, if handling sensitive data)

- Pydantic (for schema validation)

- Google IAM / OAuth 2.0 (for A2A security)

Simple Code Sample

Here’s a simplified example of integrating MCP and A2A with FastAPI:


Pros of MCP with Google A2A

- Enterprise-Grade Security: OAuth 2.0-based token exchange ensures secure Agent-to-Agent interactions.

- Better Context Handling: MCP enables fine-tuned, reproducible model behaviors.

- Composable Architecture: Clean separation between context preparation, routing, and LLM querying.

- Fast Development with FastAPI: Async-ready, fast-to-deploy Python backend.

- Auditability & Traceability: Track every interaction context and authorization trail.
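
As one possible way to approach the auditability point, each request can produce a small audit record that ties the caller and granted scope to a hash of the exact context that was sent. The field names below are assumptions, not part of MCP or A2A; hashing a canonical JSON form means the same context always yields the same digest regardless of key order.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any


def audit_record(caller: str, scope: str, context: dict[str, Any]) -> dict[str, str]:
    """Build an audit entry linking a caller to a digest of the context."""
    # Canonical form: sorted keys and fixed separators give a stable hash.
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"))
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "caller": caller,
        "scope": scope,
        "context_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }


record = audit_record("crm-service", "context:write", {"session_id": "s1", "user_query": "Status?"})
print(json.dumps(record, indent=2))
```

Storing the digest rather than the full context keeps sensitive data out of the log while still letting an auditor confirm which context a given response was based on.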

Industries Using MCP + A2A

- Healthcare: MCP-based agents securely access EHR data (via A2A) to assist clinicians with contextual summaries.

- Finance: AI copilots summarize financial reports and support analysts, using A2A to fetch private datasets.

- Retail: Personalized shopping assistants integrate with inventory systems using MCP-A2A for contextual recommendations.

- Automotive: Connected vehicles stream context to diagnostic agents via A2A for proactive maintenance.

- Customer Support: Internal support tools use A2A to validate tickets and MCP to guide AI-driven responses.

How NivaLabs Can Assist in the Implementation

When building production-grade AI systems, having an expert partner like NivaLabs can dramatically accelerate your journey.

NivaLabs offers end-to-end consulting and implementation services focused on practical AI adoption. Here’s how NivaLabs helps:

- Onboarding and Training: NivaLabs offers personalized workshops on MCP, A2A, and LLM integration.

- Scaling Solutions: Whether it’s horizontal scaling or cloud-native deployment, NivaLabs handles it seamlessly.

- Integrating Open-Source Tools: NivaLabs integrates LangChain, PySyft, or Haystack where needed — securely and efficiently.

- Security Reviews: A2A token scopes, JWT validation, and IAM policies? NivaLabs ensures compliance and best practices.

- Performance Optimization: NivaLabs tunes your FastAPI endpoints, async handlers, and LLM request queues.

- Strategic Deployment: From POCs to enterprise rollouts, NivaLabs ensures smooth deployment pipelines.

- Agent-Orchestration Expertise: NivaLabs engineers are seasoned in multi-agent frameworks and MCP tooling.

- Custom Tooling Development: Need a context router, schema validator, or visualizer? NivaLabs builds that.

- Monitoring & Observability: NivaLabs sets up observability stacks for your MCP agents.

- LLM Vendor Integration: Whether OpenAI, Claude, or Gemini — NivaLabs plugs them in via standardized MCP layers.


Conclusion

MCP with Google A2A represents the next evolution of enterprise AI architecture — secure, modular, and deeply contextual. Using lightweight tools like FastAPI and Python, you can build intelligent systems that don’t just respond, but understand. With robust context routing via MCP and secure communication via A2A, developers and architects can confidently scale their AI efforts across departments and industries.

If you’re ready to implement this in your organization, partner with NivaLabs — the go-to experts for turning AI ideas into impact. The future of AI systems is not just smart, it’s structured — and with MCP and A2A, you’re already there.